Leveraging OpenAI For Task Automation

What’s the Goal?

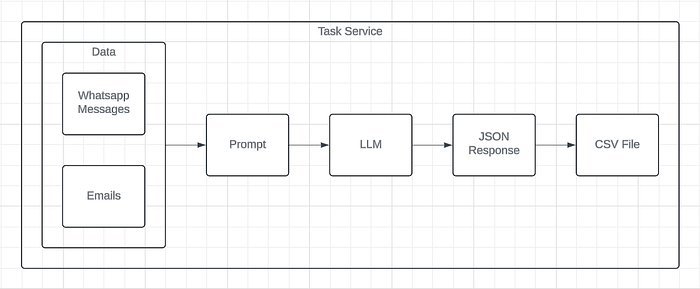

I wanted to see whether we could use OpenAI to automate some of our most mundane tasks, and to push the idea as far as it would go. The solution presented here isn’t perfect, but it’s a good starting point. I fed in emails and WhatsApp messages and asked OpenAI to detect and extract useful information about any tasks being discussed.

This means that if Person A texts Person B that a pizza has to be delivered to a certain location, that task should be added to our list automatically, without any human intervention. The list should also be updated as the conversation moves on. This post is a first step towards that kind of automation.

Making the Imports

    from langchain.prompts import PromptTemplate
    from langchain_openai import ChatOpenAI, OpenAI
    from langchain.memory import ConversationBufferMemory
    from langchain.chains.question_answering import load_qa_chain
    import csv
    import json
    from langchain.schema import Document

We will be using LangChain to provide the platform for our solution. We are importing the json and csv libraries to extract and store the data. Our CSV will act as a default storage for the task list.

    # The raw emails / WhatsApp messages go into a single Document
    data = Document(page_content="Message goes here")
    query = "Analyse the given messages and emails"

    template = """You are an AI Project Manager who specializes in extracting important information from the given emails \
    and WhatsApp messages. Use the retrieved context to answer the question. Use the JSON format below to add the relevant \
    data. You must analyze the conversations and understand what task is being discussed. You must also identify the people \
    who are involved. You must also attach TAGs to the data. You must also identify the status of the task. You must also \
    identify the date of the task. You must also identify the ID of the task. You must use the entire context to respond \
    with only one task JSON object.

    Use the following JSON format:
    {{
        "ID": "The ID of the task",
        "Description": "The description of the task",
        "People": ["person 1", "person 2"],
        "Date": "date",
        "TAG": ["Unique Identifier related to the Project"],
        "Status": "Completed, Processing, On-hold, In Transit or Rejected"
    }}

    CONTEXT:
    {context}

    QUESTION:
    {question}
    """

    prompt = PromptTemplate(
        template=template,
        input_variables=["context", "question"]
    )

This is probably the most important part of our solution: the prompt. Here we are not only describing to the LLM what it is supposed to detect, we are also enforcing our own JSON format so that we can parse the information it returns. We then use the standard PromptTemplate, where the input variable context is our bundle of WhatsApp messages and emails and question is the query defined earlier.
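
The doubled braces around the JSON skeleton matter because the template is filled in with Python-style brace formatting, so `{{` and `}}` come out as literal braces while `{context}` and `{question}` are substituted. A minimal sketch of that behaviour using plain `str.format`, assuming a shortened stand-in template (`mini_template` and the sample message are made up for illustration and are not part of the original solution):

```python
# Shortened stand-in for the full template (illustration only).
mini_template = """Use the following JSON format:
{{
  "ID": "The ID of the task",
  "Status": "Completed, Processing, On-hold, In Transit or Rejected"
}}

CONTEXT:
{context}

QUESTION:
{question}
"""

# Hypothetical message, not taken from real data.
filled = mini_template.format(
    context="A: Please deliver the pizza to 12 Main Street by 6 pm.",
    question="Analyse the given messages and emails",
)

# The JSON skeleton keeps single braces; the placeholders are replaced.
print(filled)
```

Printing the filled prompt like this is a cheap way to check what the model will actually receive before spending any API calls.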

    chat_llm = ChatOpenAI(
        openai_api_key="",  # your OpenAI API key
        model="gpt-3.5-turbo",
        temperature=0,
        verbose=True,
        # Ask the API for JSON mode so the reply is a parseable JSON object
        model_kwargs={"response_format": {"type": "json_object"}},
    )

    # "stuff" places all the documents into the {context} slot of the prompt
    chain = load_qa_chain(
        llm=chat_llm,
        chain_type="stuff",
        prompt=prompt,
        verbose=True
    )

    response = chain.run(
        input_documents=[data],
        question=query
    )

Here we initialise ChatOpenAI and then load our chain. To reliably get JSON back, you must pass the response format instruction through the model_kwargs field, as shown above. OpenAI's JSON mode also expects the prompt itself to mention JSON, which our template does. You can then run the chain to get the response. Remember that input_documents only accepts a list of Document objects, even when there is just one.
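
JSON mode makes the reply parseable, but it does not check that the object has the keys or status values our prompt asks for. A minimal sketch of a check that could run before anything is stored; `validate_task`, `REQUIRED_KEYS`, `ALLOWED_STATUSES` and the sample response are hypothetical names and data introduced for illustration:

```python
import json

# Keys and statuses taken from the JSON format in the prompt.
REQUIRED_KEYS = {"ID", "Description", "People", "Date", "TAG", "Status"}
ALLOWED_STATUSES = {"Completed", "Processing", "On-hold", "In Transit", "Rejected"}


def validate_task(raw: str) -> dict:
    """Parse the model output and raise ValueError if it does not match the schema."""
    try:
        task = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Response is not valid JSON: {exc}") from exc
    if not isinstance(task, dict):
        raise ValueError("Expected a single JSON object")
    missing = REQUIRED_KEYS - task.keys()
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    if task["Status"] not in ALLOWED_STATUSES:
        raise ValueError(f"Unexpected status: {task['Status']!r}")
    return task


# Made-up response for illustration; a real one would come from the chain.
sample_response = json.dumps({
    "ID": "T-1",
    "Description": "Deliver pizza to 12 Main Street",
    "People": ["Person A", "Person B"],
    "Date": "2024-01-15",
    "TAG": ["pizza-delivery"],
    "Status": "Processing",
})

task = validate_task(sample_response)
print(task["Description"])
```

Raising an error here, rather than writing a malformed row, keeps a bad model reply from quietly corrupting the task list.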

    response_obj = json.loads(response)

    # Flatten list values (People, TAG) into comma-separated strings for the CSV
    flattened = {}
    for key, value in response_obj.items():
        if isinstance(value, list):
            flattened[key] = ', '.join(map(str, value))
        else:
            flattened[key] = value

    with open("data.csv", mode='a+', newline='') as file:  # You can modify the file name here
        writer = csv.DictWriter(file, fieldnames=flattened.keys())
        # Write the header only when the file is still empty
        if file.tell() == 0:
            writer.writeheader()
        writer.writerow(flattened)

Once we have the response, we call json.loads() to convert it to a Python dictionary, flatten any list values into strings, and append the result to the CSV file. Once it is in the CSV, it will be automatically fetched onto the web page.
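
The code above only appends, so a later message about the same task would create a second row instead of updating the first. One possible way to keep the list updated is to rewrite the file with the matching row replaced. This is a sketch under assumptions, not part of the original solution: `upsert_task` and `FIELDNAMES` are hypothetical names, the task is assumed to be already flattened, and the model is assumed to reuse the same ID for the same task.

```python
import csv
import os
import tempfile

# Column order taken from the JSON format in the prompt.
FIELDNAMES = ["ID", "Description", "People", "Date", "TAG", "Status"]


def upsert_task(path: str, task: dict) -> None:
    """Replace the row whose ID matches task["ID"], or append a new row."""
    rows = []
    if os.path.exists(path):
        with open(path, newline="") as file:
            rows = list(csv.DictReader(file))

    for i, row in enumerate(rows):
        if row["ID"] == str(task["ID"]):
            rows[i] = task  # same task seen again: overwrite the old row
            break
    else:
        rows.append(task)  # no matching ID: this is a new task

    with open(path, mode="w", newline="") as file:
        writer = csv.DictWriter(file, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(rows)


# Usage with made-up data, written to a temporary directory.
demo_path = os.path.join(tempfile.mkdtemp(), "tasks.csv")
base = {
    "ID": "T-1",
    "Description": "Deliver pizza to 12 Main Street",
    "People": "Person A, Person B",
    "Date": "2024-01-15",
    "TAG": "pizza-delivery",
    "Status": "Processing",
}
upsert_task(demo_path, base)
upsert_task(demo_path, {**base, "Status": "Completed"})

with open(demo_path, newline="") as file:
    stored = list(csv.DictReader(file))
print(stored)
```

Rewriting the whole file is fine for a small task list; a larger one would be better served by a real database.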

This is a very rough solution that could do with a lot of optimization, but it is a useful concept that can lead to amazing results. We can leverage these AI tools, but the main drawback is the cost. For individual use, running LLMs locally would remove the API cost entirely, and that’s something I will be exploring next.