Combining ChatGPT and Perplexity with LangGraph

Combining ChatGPT and Perplexity in a LangGraph workflow is a practical way to build AI applications that need both language understanding and current information. LangGraph structures the interaction as a graph, so each model gets its own step: ChatGPT interprets the user's query, and Perplexity retrieves up-to-date information from the web. Because each step is a separate node, the workflow stays modular and is easy to extend with further models or tools.

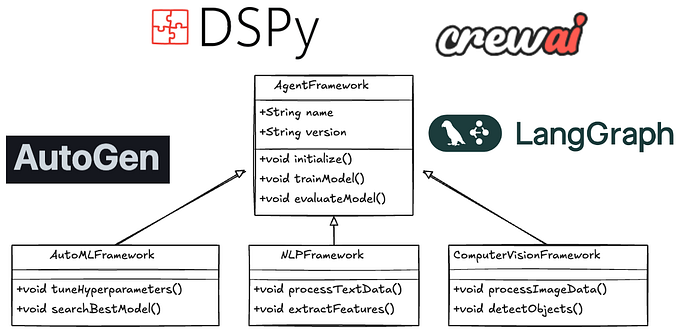

LangGraph: Orchestrating LLM Interactions

LangGraph models an LLM application as a graph instead of a fixed, linear chain. Each node in the graph represents a distinct task or LLM interaction, and the edges define how control and shared state pass from one node to the next. With support for conditional branching and parallel execution, LangGraph lets developers coordinate multiple models in workflows that adapt to intermediate results.

Perplexity: Enhanced Information Retrieval with LLMs

Perplexity offers a family of LLMs built for information retrieval and question answering. Its online models combine a language model with live web search, so responses can draw on current sources and not only on training data. LangChain exposes these models through the ChatPerplexity class, which makes it straightforward to add up-to-date information to a LangChain or LangGraph application.

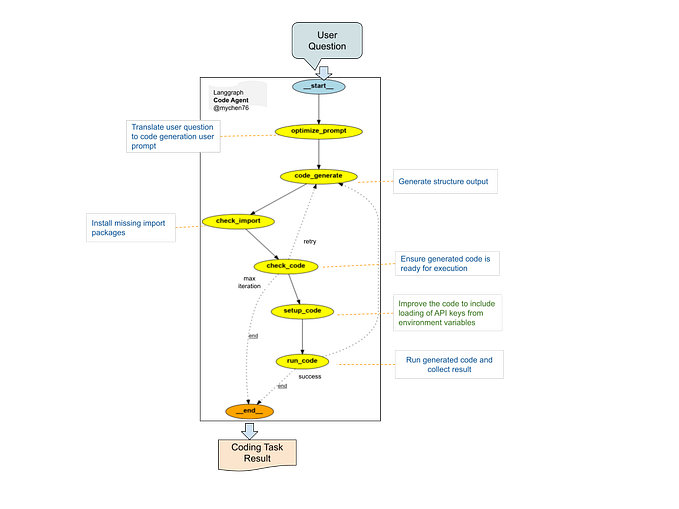

A Simple Commodity Information Workflow

This article focuses on a simplified workflow that combines ChatGPT and Perplexity to retrieve information related to a user’s query about a commodity. The workflow first uses ChatGPT to summarize the user’s query and then uses Perplexity to provide more detailed market information related to the query.

Code Walkthrough: Step-by-Step Explanation

Let’s dissect the Python code that implements this simplified workflow:

```python
import json
import logging
from typing import Any, Dict, Optional

from typing_extensions import TypedDict
from langgraph.graph import StateGraph, START, END
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_community.chat_models import ChatPerplexity


# Define the State (TypedDict)
class State(TypedDict):
    user_query: str          # the question as the user typed it
    chatgpt_response: str    # ChatGPT's summary of the query
    perplexity_results: str  # market information returned by Perplexity
    error: Optional[str]     # error message, or None if nothing failed
```

State definition: The State TypedDict defines the structure for storing information as the workflow progresses. It holds the user's query, the ChatGPT response, the Perplexity results, and any error message. Each node returns only the keys it wants to update, and LangGraph merges them into the shared state.
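To make the role of the state more concrete, here is a minimal plain-Python sketch of the idea that each node returns a partial update which is then merged into the shared state. The `apply_update` helper is hypothetical and only imitates a simple last-value-wins merge; it is not LangGraph's actual implementation.

```python
from typing import Any, Dict, Optional, TypedDict


class State(TypedDict, total=False):
    user_query: str
    chatgpt_response: str
    perplexity_results: str
    error: Optional[str]


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: return a new state where keys in `update` overwrite old values."""
    return {**state, **update}


# Start with only the user's query, as the workflow does.
state: Dict[str, Any] = {"user_query": "Price of wheat in Chicago"}

# The first node contributes a summary and an error flag.
state = apply_update(state, {"chatgpt_response": "User wants the Chicago wheat price.", "error": None})

# The second node contributes only its own field; earlier keys are kept.
state = apply_update(state, {"perplexity_results": "(market summary would go here)"})

print(sorted(state))
```

Keys that a node does not return stay as they were, which is why a node only needs to return the fields it is responsible for.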

```python
# Define the SimpleCommoditySearch class
class SimpleCommoditySearch:
    def __init__(self, openai_api_key: str, pplx_api_key: str,
                 log_file: str = "commodity_search.log"):
        self.openai_api_key = openai_api_key
        self.pplx_api_key = pplx_api_key

        # Set up the logger that the node methods use via self.logger
        self.logger = logging.getLogger("commodity_search")
        self.logger.setLevel(logging.INFO)
        if not self.logger.handlers:  # avoid duplicate handlers when instantiated twice
            handler = logging.FileHandler(log_file)
            handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
            self.logger.addHandler(handler)

        self._setup_workflow()

    def _setup_workflow(self):
        workflow = StateGraph(State)

        # Define the nodes of the graph
        workflow.add_node("chatgpt_interaction", self._chatgpt_interaction)
        workflow.add_node("perplexity_search", self._perplexity_search)

        # Define the edges connecting the nodes
        workflow.add_edge(START, "chatgpt_interaction")
        workflow.add_edge("chatgpt_interaction", "perplexity_search")
        workflow.add_edge("perplexity_search", END)

        # Compile the graph into a runnable object
        self.chain = workflow.compile()
```

SimpleCommoditySearch class: This class encapsulates the entire workflow.

__init__: Stores the API keys and sets up logging. Crucially, it calls _setup_workflow to define the LangGraph structure.

_setup_workflow: Defines the LangGraph workflow as a directed graph. It adds two nodes, chatgpt_interaction and perplexity_search, connects them with edges to define the order of execution, and compiles the graph into a runnable stored in self.chain.

```python
    def _chatgpt_interaction(self, state: State) -> Dict[str, Any]:
        try:
            llm = ChatOpenAI(model="gpt-4o-mini", openai_api_key=self.openai_api_key)  # Or gpt-4
            prompt = ChatPromptTemplate.from_messages([
                ("system", "You are a helpful assistant. Summarize the user query."),
                ("human", "{user_query}"),
            ])
            # format_messages keeps the system/human roles; format() would flatten them into one string
            messages = prompt.format_messages(user_query=state["user_query"])
            response = llm.invoke(messages)
            self.logger.info("ChatGPT interaction successful.")
            return {"chatgpt_response": response.content, "error": None}
        except Exception as e:
            self.logger.error(f"ChatGPT interaction error: {e}")
            return {"chatgpt_response": "", "error": str(e)}
```

_chatgpt_interaction: This method calls ChatGPT. It takes the user's query from the state, uses a ChatPromptTemplate to construct the prompt, and invokes the model. The response is stored in the chatgpt_response field of the state; if the call fails, the exception message is stored in error instead.

```python
    def _perplexity_search(self, state: State) -> Dict[str, Any]:
        try:
            chat_model = ChatPerplexity(
                temperature=0,
                pplx_api_key=self.pplx_api_key,
                # Or another model; names change, so check Perplexity's current model list
                model="llama-3.1-sonar-small-128k-online",
            )
            prompt = ChatPromptTemplate.from_messages([
                ("system", "You are a helpful assistant providing commodity market information."),
                ("human", "What is the current market situation related to: {user_query}? "
                          "Provide recent data and analysis."),
            ])
            # Use the original user query, passed as a template variable
            # so that braces in the query are not parsed as placeholders
            messages = prompt.format_messages(user_query=state["user_query"])
            response = chat_model.invoke(messages)
            self.logger.info("Perplexity search successful.")
            # Do not reset "error" here, so a failure from the ChatGPT step stays visible
            return {"perplexity_results": response.content}
        except Exception as e:
            self.logger.error(f"Perplexity search error: {e}")
            return {"perplexity_results": "", "error": str(e)}
```

_perplexity_search: This method calls Perplexity. It also takes the original user query from the state (not the ChatGPT summary), constructs a prompt, and invokes the model through the ChatPerplexity class. The results are stored in the perplexity_results field of the state. On success it leaves the error field untouched, so a failure recorded by the ChatGPT step is still present in the final state.

```python
    async def process_query(self, query: str) -> Dict[str, Any]:
        try:
            # ainvoke is the async counterpart of invoke, so this coroutine can be awaited
            state = await self.chain.ainvoke({"user_query": query})
            return state  # Return the complete state
        except Exception as e:
            self.logger.error(f"Error processing query: {e}")
            return {"user_query": query, "chatgpt_response": "", "perplexity_results": "", "error": str(e)}
```

process_query: This asynchronous method is the entry point for processing user queries. It runs the compiled graph with the user's query and returns the complete state dictionary, containing the results from both the ChatGPT and Perplexity interactions.
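One reason to expose an async entry point is that several queries can be awaited concurrently. The sketch below shows the pattern with `asyncio.gather`; `fake_process_query` is a hypothetical stand-in for process_query that sleeps briefly and makes no API calls, and `run_batch` is likewise an illustrative name.

```python
import asyncio
from typing import Any, Dict, List


async def fake_process_query(query: str) -> Dict[str, Any]:
    """Hypothetical stand-in for SimpleCommoditySearch.process_query."""
    await asyncio.sleep(0.01)  # simulate waiting on network I/O
    return {
        "user_query": query,
        "chatgpt_response": f"Summary of: {query}",
        "perplexity_results": f"Market notes for: {query}",
        "error": None,
    }


async def run_batch(queries: List[str]) -> List[Dict[str, Any]]:
    # gather runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fake_process_query(q) for q in queries))


results = asyncio.run(run_batch(["Price of wheat in Chicago", "Brent crude outlook"]))
for r in results:
    print(r["user_query"], "->", r["error"] or "ok")
```

Concurrency only pays off when the awaited call actually yields to the event loop while it waits on the network; a coroutine that blocks internally would still run the queries one after another.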

```python
# Example usage:
async def main():
    openai_api_key = "YOUR_OPENAI_API_KEY"  # Replace with your actual key
    pplx_api_key = "YOUR_PERPLEXITY_API_KEY"  # Replace with your actual key
    commodity_search = SimpleCommoditySearch(openai_api_key, pplx_api_key)
    query = "Price of wheat in Chicago"
    result = await commodity_search.process_query(query)
    print(json.dumps(result, indent=4))  # Print the result nicely formatted


if __name__ == "__main__":
    import asyncio

    asyncio.run(main())
```

Example usage: The main function demonstrates how to use the SimpleCommoditySearch class. It creates an instance of the class, provides a sample query, and prints the result as indented JSON with json.dumps. Remember to replace the placeholder API keys with your actual keys.
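Since the returned state carries an error field, a caller will usually want to check it before using the other fields. The following sketch shows one way to do that; `format_result` is a hypothetical helper, and the two sample dictionaries are made-up data in the shape of the workflow's state.

```python
from typing import Any, Dict


def format_result(result: Dict[str, Any]) -> str:
    """Hypothetical helper: turn the final state into a short text report."""
    if result.get("error"):
        return f"Query failed: {result['error']}"
    return (
        f"Query: {result['user_query']}\n"
        f"Summary: {result['chatgpt_response']}\n"
        f"Market info: {result['perplexity_results']}"
    )


# Made-up sample states for illustration only
sample_ok = {
    "user_query": "Price of wheat in Chicago",
    "chatgpt_response": "User wants the Chicago wheat price.",
    "perplexity_results": "(market summary would go here)",
    "error": None,
}
sample_err = {
    "user_query": "Price of wheat in Chicago",
    "chatgpt_response": "",
    "perplexity_results": "",
    "error": "rate limit exceeded",
}

print(format_result(sample_ok))
print(format_result(sample_err))
```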

Running the Code

To run this code, install the necessary libraries: langgraph, langchain, langchain-openai, langchain-community, and typing-extensions. You will also need API keys for both OpenAI and Perplexity. Set them as environment variables or, for quick experiments only, provide them directly in the code (not recommended for production).
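Reading the keys from environment variables could look like the sketch below. The `load_api_keys` helper is hypothetical, and the variable names `OPENAI_API_KEY` and `PPLX_API_KEY` are an assumption about how your environment is set up; adjust them to match your own configuration.

```python
import os
from typing import Mapping, Tuple


def load_api_keys(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Hypothetical helper: read both keys from the environment or fail early."""
    names = ("OPENAI_API_KEY", "PPLX_API_KEY")  # assumed variable names
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return env["OPENAI_API_KEY"], env["PPLX_API_KEY"]


# Demonstration with an explicit mapping so the snippet runs without real keys
demo_env = {"OPENAI_API_KEY": "sk-demo", "PPLX_API_KEY": "pplx-demo"}
openai_key, pplx_key = load_api_keys(demo_env)
print("Keys loaded:", bool(openai_key and pplx_key))
```

In a real script you would call `load_api_keys()` without arguments and pass the two values to SimpleCommoditySearch, which keeps the keys out of the source code.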

By combining ChatGPT's language skills with Perplexity's web-backed information retrieval, LangGraph turns a raw user query into a structured, stateful workflow in which every intermediate result is kept in one state object. The same pattern extends to more nodes and more LLMs, which makes it a solid starting point for applications that need answers grounded in current data.