Building LLM Apps with LangChain

What are LLMs

Large Language Models (LLMs), such as OpenAI’s GPT-3.5 and the newer GPT-4, are advanced artificial intelligence models that understand an input (a prompt) and generate human-like text in response. ChatGPT is built on these models. LLMs are trained on vast text datasets to learn the patterns and intricacies of language, which lets them perform many natural language processing tasks, including text completion, translation and summarization. Their applications range from chatbots to content generation and other language understanding tasks.

What is LangChain

LangChain is an open-source framework that helps developers build applications on top of large language models (LLMs) by connecting them to external components. Its goal is to link powerful LLMs, such as OpenAI’s GPT-3.5 and GPT-4, with a variety of external data sources. This makes it easier to create natural language processing (NLP) applications that take advantage of these models.

The Fundamentals

LangChain has many building blocks, including models, prompts, templates and output parsers. Models are the language models that LangChain calls. A prompt is the input passed to a model. An output parser takes the model’s text response and parses it into structured data, such as a Python dictionary. Prompt templates are reusable prompts with placeholders that are filled in at run time, so a prompt that has been tested and gives good results can be applied to many different inputs.
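To make the template and parser ideas concrete without depending on a particular LangChain version, here is a minimal sketch in plain Python. It is an analogue, not LangChain's implementation. `fill_template` and `parse_json_output` are hypothetical helpers, and the model reply is a hard-coded string standing in for a real LLM response.

```python
import json
import string


def fill_template(template: str, **values: str) -> str:
    """Fill the {placeholders} in a template, failing loudly if any is missing."""
    # string.Formatter().parse yields (literal, field_name, spec, conversion) tuples
    fields = {name for _, name, _, _ in string.Formatter().parse(template) if name}
    missing = fields - set(values)
    if missing:
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


def parse_json_output(raw: str) -> dict:
    """Turn a model's raw text reply into structured data.

    Assumes the model was instructed to include one JSON object in its reply;
    any chatter before or after the object is ignored.
    """
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(raw[start:end + 1])


template = "Translate the text delimited by double backticks into a style that is {style}. text: ``{text}``"
prompt = fill_template(template, style="British English", text="Les fleurs égayent chaque jour.")

# A hard-coded reply standing in for what a real model might return
reply = 'Sure! {"translation": "Flowers brighten every day!", "language": "en-GB"}'
data = parse_json_output(reply)
print(data["translation"])  # Flowers brighten every day!
```

LangChain's own template and output parser classes go further. For example, they build chat messages and generate format instructions for the model.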

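Here is a LangChain prompt template that translates a piece of text into a given style:

```python
from langchain.prompts import ChatPromptTemplate

template_string = """Translate the text \
that is delimited by double backticks \
into a style that is {style}. \
text: ``{text}``
"""

# Build a reusable template; {style} and {text} are filled in at run time
prompt_template = ChatPromptTemplate.from_template(template_string)

user_style = """British English \
in an excited and rushed tone
"""

# French for: "Flowers brighten every day with their vibrant colours,
# spreading joy and natural beauty in our world."
user_email = """
Les fleurs égayent chaque \
jour avec leurs couleurs vibrantes, \
répandant la joie et la beauté \
naturelle dans notre monde.
"""

# Produces a list of chat messages, ready to send to the model
user_messages = prompt_template.format_messages(
    style=user_style,
    text=user_email)
```

LangChain Memory System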

Conversation Buffer Memory: This is the most basic type of memory. It stores the entire conversation history verbatim and makes it available to the model as context on every new turn.

```python
from langchain.memory import ConversationBufferMemory
memory = ConversationBufferMemory()
```

Conversation Buffer Window Memory: This type of memory keeps only a sliding window of the conversation. The parameter k sets how many of the most recent exchanges (a user message plus the model’s reply) are kept, so with k=1 the memory retains only the last exchange.
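Both buffer memory and window memory can be sketched in a few lines of plain Python. `WindowMemory` is a hypothetical class written for illustration, not LangChain's implementation. It assumes one exchange means one user message plus one model reply, and it treats `k=None` as "keep everything", which is what plain buffer memory does.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Exchange = Tuple[str, str]  # (user message, model reply)


class WindowMemory:
    """Remember the last k exchanges; k=None remembers the whole conversation."""

    def __init__(self, k: Optional[int] = None) -> None:
        # A deque with maxlen drops its oldest item automatically when full
        self.exchanges: Deque[Exchange] = deque(maxlen=k)

    def save(self, user: str, ai: str) -> None:
        self.exchanges.append((user, ai))

    def history(self) -> str:
        """Render the remembered exchanges as text to include in the next prompt."""
        return "\n".join(f"Human: {user}\nAI: {ai}" for user, ai in self.exchanges)


full = WindowMemory()         # like buffer memory: keeps everything
window = WindowMemory(k=1)    # like a window of one exchange
for memory in (full, window):
    memory.save("Hi, I'm Sam", "Hello Sam!")
    memory.save("What is 1+1?", "2")

print(window.history())
# Human: What is 1+1?
# AI: 2
```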

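```python
from langchain.memory import ConversationBufferWindowMemory
memory = ConversationBufferWindowMemory(k=1)
```

Conversation Token Buffer Memory: This type of memory limits the history by tokens rather than by exchanges. You set max_token_limit, and once the stored history exceeds that many tokens, the oldest messages are dropped. The llm argument is used to count tokens.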
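The token limit can be sketched in the same way. LangChain asks the `llm` to count tokens with the model's real tokenizer. This sketch instead assumes a crude stand-in that counts whitespace-separated words, and `trim_to_token_limit` is a hypothetical helper.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word
    return len(text.split())


def trim_to_token_limit(
    messages: List[str],
    max_token_limit: int,
    counter: Callable[[str], int] = count_tokens,
) -> List[str]:
    """Drop the oldest messages until the rest fit within max_token_limit."""
    kept = list(messages)
    while kept and sum(counter(m) for m in kept) > max_token_limit:
        kept.pop(0)  # the oldest message goes first
    return kept


history = [
    "Human: AI is what?",
    "AI: Amazing!",
    "Human: Backpropagation is what?",
    "AI: Beautiful!",
    "Human: Chatbots are what?",
    "AI: Charming!",
]
print(trim_to_token_limit(history, max_token_limit=10))
# ['AI: Beautiful!', 'Human: Chatbots are what?', 'AI: Charming!']
```

Because whole messages are dropped from the front, the remaining history can start in the middle of an exchange, as it does here.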

```python
from langchain.memory import ConversationTokenBufferMemory
memory = ConversationTokenBufferMemory(llm=llm, max_token_limit=50)  # llm: e.g. ChatOpenAI(temperature=0)
```

Conversation Summary Buffer Memory: Instead of discarding old messages, this variant uses the model (under the hood) to summarize them. It keeps the most recent messages verbatim up to max_token_limit and condenses anything older into a running summary. This means that a long conversation, or a long block of information you saved into memory, can still be drawn on later.
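Here is a sketch of the summary-buffer idea in plain Python. In LangChain the summary is written by the LLM. Here, `summarize` is a hypothetical placeholder that only keeps the first few words of each message, so the example runs without a model. Tokens are again approximated by word counts, and `SummaryBufferMemory` is likewise a hypothetical class, not LangChain's.

```python
from typing import Callable, List, Tuple


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word
    return len(text.split())


def summarize(previous_summary: str, new_messages: List[str]) -> str:
    # Hypothetical placeholder for the LLM call that folds new_messages into the
    # running summary; it just keeps the first three words of each message
    parts = [previous_summary] if previous_summary else []
    for message in new_messages:
        words = message.split()
        parts.append(" ".join(words[:3]) + ("..." if len(words) > 3 else ""))
    return " ".join(parts)


class SummaryBufferMemory:
    """Keep recent messages verbatim; fold older ones into a running summary."""

    def __init__(self, max_token_limit: int,
                 summarizer: Callable[[str, List[str]], str] = summarize) -> None:
        self.max_token_limit = max_token_limit
        self.summarizer = summarizer
        self.summary = ""
        self.buffer: List[str] = []

    def save(self, message: str) -> None:
        self.buffer.append(message)
        pruned: List[str] = []
        # Move the oldest messages out of the buffer until it fits the token limit
        while self.buffer and sum(map(count_tokens, self.buffer)) > self.max_token_limit:
            pruned.append(self.buffer.pop(0))
        if pruned:
            self.summary = self.summarizer(self.summary, pruned)

    def context(self) -> Tuple[str, List[str]]:
        """Return (summary, recent messages) to include in the next prompt."""
        return self.summary, list(self.buffer)


memory = SummaryBufferMemory(max_token_limit=6)
memory.save("Human: Hi, I'm Sam")
memory.save("AI: Hello Sam!")
memory.save("Human: Can you plan my day?")
summary, recent = memory.context()
print(summary)  # Human: Hi, I'm... AI: Hello Sam!
print(recent)   # ['Human: Can you plan my day?']
```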

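```python
from langchain.memory import ConversationSummaryBufferMemory
memory = ConversationSummaryBufferMemory(llm=llm, max_token_limit=100)  # llm: e.g. ChatOpenAI(temperature=0)
```

The Chains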

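LLM Chain: This is the simplest chain. It takes an LLM and a prompt template as parameters. When run, it fills in the template with the given inputs, sends the resulting prompt to the model and returns the model’s response.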
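Stripped of its plumbing, an LLM chain does three things: it fills in the template, calls the model, and returns the text. The sketch below assumes a model is any function from a prompt string to a reply string. `SimpleLLMChain` and `fake_llm` are hypothetical names. `fake_llm` only echoes its prompt, so the example runs without an API key.

```python
from dataclasses import dataclass
from typing import Callable

LLM = Callable[[str], str]  # any function that maps a prompt string to a reply string


@dataclass
class SimpleLLMChain:
    llm: LLM
    prompt: str  # template with {placeholders}

    def run(self, **inputs: str) -> str:
        # Fill the template, send the prompt to the model, return the reply
        return self.llm(self.prompt.format(**inputs))


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call so the example runs offline
    return f"<reply to: {prompt}>"


chain = SimpleLLMChain(
    llm=fake_llm,
    prompt="What is the best name for a company that makes {product}?",
)
print(chain.run(product="socks"))
# <reply to: What is the best name for a company that makes socks?>
```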

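```python
from langchain.chains import LLMChain
chain = LLMChain(llm=llm, prompt=prompt)  # llm: a chat model; prompt: e.g. a ChatPromptTemplate
```

Sequential Chain: There are two types of sequential chains. The first, SimpleSequentialChain, is linear: each chain takes a single input, and its single output becomes the input of the next chain. The second, SequentialChain, is more general: chains can have multiple inputs and outputs, and a chain can use any named variable that was passed in at the start or produced by an earlier chain.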
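The difference between the two kinds can be sketched with plain functions standing in for LLM chains. All names here are hypothetical. In the simple version, each step maps one string to one string. In the general version, each step reads named variables from a shared dictionary and stores its result under its own output key, which is the role `output_key` plays for each chain passed to SequentialChain.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def run_simple_sequential(steps: Sequence[Callable[[str], str]], text: str) -> str:
    """Linear chaining: each step's single output is the next step's single input."""
    for step in steps:
        text = step(text)
    return text


# A step in the general version: (input variable names, output key, function)
Step = Tuple[List[str], str, Callable[..., str]]


def run_sequential(steps: Sequence[Step], inputs: Dict[str, str]) -> Dict[str, str]:
    """General chaining: steps read named variables and add their output under a key."""
    variables = dict(inputs)
    for input_keys, output_key, fn in steps:
        missing = [key for key in input_keys if key not in variables]
        if missing:
            raise KeyError(f"step needs undefined variables: {missing}")
        variables[output_key] = fn(**{key: variables[key] for key in input_keys})
    return variables


# Toy stand-ins for LLM calls
def translate(review: str) -> str:
    return f"EN({review})"


def summarize(english_review: str) -> str:
    return f"SUMMARY({english_review})"


def detect_language(review: str) -> str:
    return "fr"


def reply(summary: str, language: str) -> str:
    return f"REPLY[{language}]({summary})"


print(run_simple_sequential([translate, summarize], "avis"))  # SUMMARY(EN(avis))

result = run_sequential(
    [
        (["review"], "english_review", translate),
        (["english_review"], "summary", summarize),
        (["review"], "language", detect_language),
        (["summary", "language"], "followup_message", reply),
    ],
    {"review": "avis"},
)
print(result["followup_message"])  # REPLY[fr](SUMMARY(EN(avis)))
```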

```python
from langchain.chains import SimpleSequentialChain, SequentialChain

# Simple sequential chain: each chain has one input and one output,
# and each output becomes the next chain's input
overall_simple_chain = SimpleSequentialChain(
    chains=[chain_one, chain_two],
    verbose=True,
)

# Sequential chain: each chain (an LLMChain with an output_key) can read the
# initial input variables and any output produced by an earlier chain
overall_chain = SequentialChain(
    chains=[chain_one, chain_two, chain_three, chain_four],
    input_variables=["Variable1"],
    output_variables=["Variable2", "Variable3", "Variable4"],
    verbose=True,
)
```

Router Chain: This chain works like a network router: it uses the LLM to decide which of several destination chains should handle a given input. For example, to answer science questions, the router could send each question to a chain specialised in physics, biology or chemistry, depending on the subject. It also includes a default chain, which handles the input when none of the specialised chains is a good match.
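The routing decision itself can be sketched without a model. In LangChain, the router is an LLM that reads a short description of each destination. In this hypothetical sketch, a keyword-overlap function stands in for that LLM call, and each destination "chain" is a plain function.

```python
from typing import Callable, Dict, Optional, Set

Chain = Callable[[str], str]


def pick_destination(question: str, keywords: Dict[str, Set[str]]) -> Optional[str]:
    """Stand-in for the router LLM: choose the destination with the most keyword hits."""
    words = set(question.lower().replace("?", " ").split())
    best: Optional[str] = None
    best_hits = 0
    for name, vocabulary in keywords.items():
        hits = len(words & vocabulary)
        if hits > best_hits:
            best, best_hits = name, hits
    return best  # None means no destination matched


def route(question: str, destinations: Dict[str, Chain],
          keywords: Dict[str, Set[str]], default: Chain) -> str:
    name = pick_destination(question, keywords)
    # Fall back to the default chain when nothing matches
    chain = destinations[name] if name in destinations else default
    return chain(question)


def make_chain(subject: str) -> Chain:
    # Toy destination chain: a real one would be an LLM chain with a subject-specific prompt
    return lambda question: f"[{subject}] {question}"


destinations = {s: make_chain(s) for s in ("physics", "biology", "chemistry")}
keywords = {
    "physics": {"force", "energy", "gravity", "light"},
    "biology": {"cell", "dna", "species", "evolution"},
    "chemistry": {"molecule", "reaction", "acid", "bond"},
}
general = make_chain("general")

print(route("What is gravity?", destinations, keywords, general))            # [physics] What is gravity?
print(route("Why does DNA replicate?", destinations, keywords, general))     # [biology] Why does DNA replicate?
print(route("Who painted the Mona Lisa?", destinations, keywords, general))  # [general] Who painted the Mona Lisa?
```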

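```python
from langchain.chains.router import MultiPromptChain
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE
from langchain.prompts import PromptTemplate

# destinations_str lists each destination as "name: description", one per line;
# destination_chains maps those names to LLMChains; default_chain handles the rest
router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(
    destinations=destinations_str
)

router_prompt = PromptTemplate(
    template=router_template,
    input_variables=["input"],
    output_parser=RouterOutputParser(),
)

# The router LLM reads the input and picks which destination should handle it
router_chain = LLMRouterChain.from_llm(llm, router_prompt)

chain = MultiPromptChain(
    router_chain=router_chain,
    destination_chains=destination_chains,
    default_chain=default_chain,
    verbose=True,
)
```

Vector Stores and Embeddings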

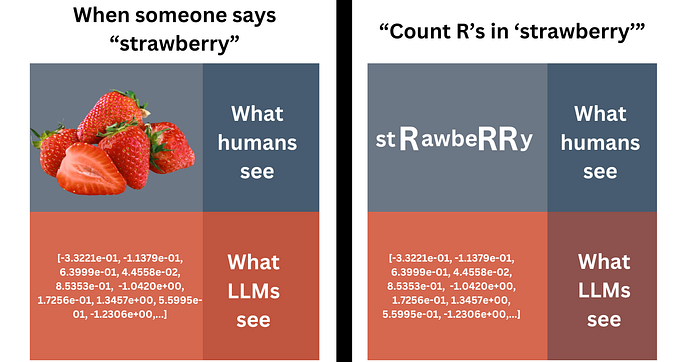

Embeddings turn data such as words or sentences into vectors: lists of numbers that place each item as a point in a multi-dimensional space, where items with similar meanings end up close to each other. Vector databases (vector stores) store these vectors and can efficiently find the stored vectors that are most similar to a query vector. This makes them very different from relational or document databases, which look up records by exact keys or field values rather than by similarity. You can use OpenAIEmbeddings to create embeddings of your data, which you can then store in a vector store. This is what the code looks like:

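```python
from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()  # reads the OPENAI_API_KEY environment variable

# Embed a single piece of text; the result is a list of floats
embed = embeddings.embed_query("I am Luke Skywalker!")

print(embed[:5])  # first five dimensions

# Resultant embedding
# [-0.014382644556462765, -0.02181452140212059, -0.01809225231409073, -0.03127212077379227, -0.028461430221796036]
```

Agents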

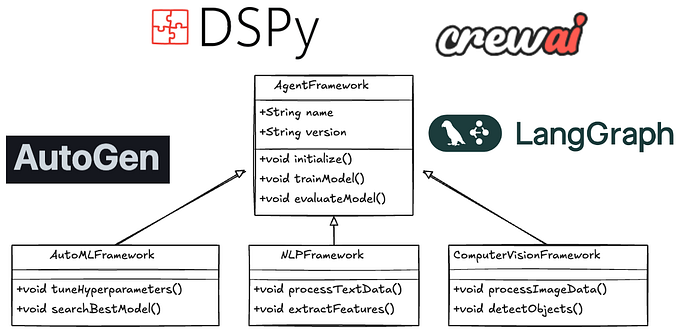

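An agent uses an LLM as a reasoning engine. Rather than following a fixed sequence of steps, it decides at each step which action to take next, such as calling one of its tools, based on the input and the results of its previous actions. LangChain supports several agent types. Equipping an agent with tools (for example, a calculator, a search engine or a Python interpreter) extends what it can do, and how it behaves depends on how it is configured. For example, we can have a math agent that uses a calculator tool to solve math problems, and another agent that writes and runs Python code.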
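The control flow of a ReAct-style agent (reason, act, observe, repeat) can be sketched in plain Python. Everything in it is hypothetical. `scripted_llm` replays fixed decisions in place of a real model; in LangChain, the model would write out its chosen action as text that the agent then parses. `calculator` is a toy tool. What the sketch does show is the loop itself: the model picks a tool, the tool runs, and its result is fed back until the model returns a final answer or a step limit is reached.

```python
import ast
import operator
from typing import Callable, Dict, List, Tuple

# Arithmetic operators the toy calculator tool understands
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Toy tool: evaluate +, -, *, / on numbers without using eval()."""
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError(f"unsupported expression: {expression}")
    return str(evaluate(ast.parse(expression, mode="eval").body))


Decision = Tuple[str, str]  # (tool name or "final", tool input or final answer)
Model = Callable[[str, List[str]], Decision]


def scripted_llm(question: str, observations: List[str]) -> Decision:
    # Hypothetical stand-in for the model's reasoning step
    if not observations:
        return ("calculator", "300 * 25 / 100")
    return ("final", f"25% of 300 is {observations[-1]}")


def run_agent(question: str, llm: Model, tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    observations: List[str] = []  # tool results fed back to the model
    for _ in range(max_steps):
        action, payload = llm(question, observations)
        if action == "final":
            return payload
        tool = tools.get(action)
        observations.append(tool(payload) if tool else f"Unknown tool: {action}")
    return "Stopped: step limit reached"


print(run_agent("What is 25% of 300?", scripted_llm, {"calculator": calculator}))
# 25% of 300 is 75.0
```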

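In LangChain, the math agent and the Python agent described above can be created as follows:

```python
from langchain.chat_models import ChatOpenAI
from langchain.agents import load_tools, initialize_agent, AgentType
from langchain.agents.agent_toolkits import create_python_agent
from langchain.tools.python.tool import PythonREPLTool

llm = ChatOpenAI(temperature=0)

# Math agent: the "llm-math" tool lets the agent hand arithmetic to a calculator
tools = load_tools(["llm-math"], llm=llm)
math_agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    handle_parsing_errors=True,
    verbose=True)

# Python agent: writes Python code and runs it in a REPL to answer questions
python_agent = create_python_agent(
    llm,
    tool=PythonREPLTool(),
    verbose=True
)
```

Splitting, Loading and Retrieval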

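LangChain also provides many features for working with documents. You can load documents (data) from PDFs, CSV files and even from YouTube or Notion. You can then split the data into chunks using any of the many text splitters included in LangChain. To retrieve the relevant chunks, you can use methods such as plain similarity search, MMR (maximal marginal relevance, which favours results that are relevant but not redundant with each other) or LLM-aided retrieval, where the LLM turns the question into a structured query with metadata filters. Question-answering chains can then pass the retrieved chunks to the LLM in different ways, using chain types such as stuff, map_reduce, refine or map_rerank. LangChain also supports contextual compression, which trims retrieved chunks down to the parts that are relevant to the question.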
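Similarity search and MMR are easy to sketch once text has been turned into vectors. In this hypothetical example, the three-dimensional vectors are made up by hand; real embeddings, like the OpenAI one above, have hundreds or thousands of dimensions. `ToyVectorStore` is an illustrative class, not a LangChain API. MMR picks results one at a time. It scores each candidate by its similarity to the query minus its similarity to the results already picked, with `lambda_mult` setting the balance; at 1.0, MMR reduces to plain similarity search.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class ToyVectorStore:
    """In-memory store of (text, vector) pairs with brute-force search."""

    def __init__(self) -> None:
        self.texts: List[str] = []
        self.vectors: List[Vector] = []

    def add(self, text: str, vector: Vector) -> None:
        self.texts.append(text)
        self.vectors.append(vector)

    def similarity_search(self, query: Vector, k: int) -> List[str]:
        """Return the k texts whose vectors are most similar to the query."""
        ranked = sorted(range(len(self.texts)),
                        key=lambda i: cosine(query, self.vectors[i]), reverse=True)
        return [self.texts[i] for i in ranked[:k]]

    def _mmr_score(self, query: Vector, i: int, chosen: List[int], lambda_mult: float) -> float:
        relevance = cosine(query, self.vectors[i])
        redundancy = max((cosine(self.vectors[i], self.vectors[j]) for j in chosen), default=0.0)
        return lambda_mult * relevance - (1 - lambda_mult) * redundancy

    def mmr_search(self, query: Vector, k: int, lambda_mult: float = 0.5) -> List[str]:
        """Maximal marginal relevance: relevant to the query, not redundant with earlier picks."""
        candidates = list(range(len(self.texts)))
        chosen: List[int] = []
        while candidates and len(chosen) < k:
            best = max(candidates, key=lambda i: self._mmr_score(query, i, chosen, lambda_mult))
            chosen.append(best)
            candidates.remove(best)
        return [self.texts[i] for i in chosen]


docs = [
    "Amanita phalloides has a large fruiting body.",
    "A mushroom with a large fruiting body is Amanita phalloides.",
    "Amanita phalloides is one of the most poisonous mushrooms.",
    "Tomatoes are botanically a fruit.",
]
# Made-up 3-d vectors; a real embedding model would compute these from the text
vectors = [[0.9, 0.1, 0.0], [0.88, 0.12, 0.0], [0.6, 0.0, 0.8], [0.0, 1.0, 0.0]]
store = ToyVectorStore()
for text, vector in zip(docs, vectors):
    store.add(text, vector)

query = [1.0, 0.0, 0.3]  # made-up vector for "mushrooms with a large fruiting body"
print(store.similarity_search(query, k=2))  # the two near-duplicate sentences
print(store.mmr_search(query, k=2))         # the first sentence plus the "poisonous" one
```

With plain similarity search, the two near-duplicate sentences crowd out everything else. MMR gives up a little relevance for coverage and returns the fact about toxicity instead.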

The future of Large Language Models (LLMs) like OpenAI’s GPT series looks promising. Expected developments include better language understanding, wider use across industries, and more customizable and specialized models. Integrating LLMs with other technologies, such as computer vision, is likely to produce more comprehensive AI systems. Ongoing research and successive model releases will keep pushing the boundaries of what these models can achieve.
