A Multi-Layer RAG Chatbot with LangChain’s SQL Agent and MySQL

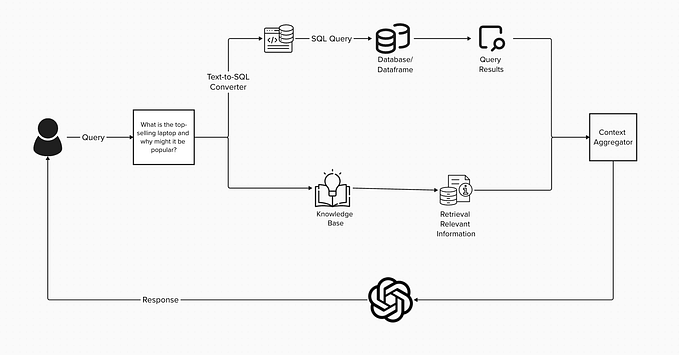

Chatbots are all the craze these days and RAG is a popular mechanism that is being thrown everywhere. In a more traditional sense, RAG is predominantly described as being a mechanism that help your model ingest a lot of that and then retrieve from that data whatever information you want. Today, I’ll introduce you to another amazing dimension of RAG where instead of documents, we will be retrieving data directly from a MySQL database. Adding into this, we are also solving another important problem that exists in the SQL Agent that we will be using. The SQL Agent does not work well with chat History so here I’ve built a Multi-Layer architecture to allow you to incorporate and use chat History when working with SQL Agents.

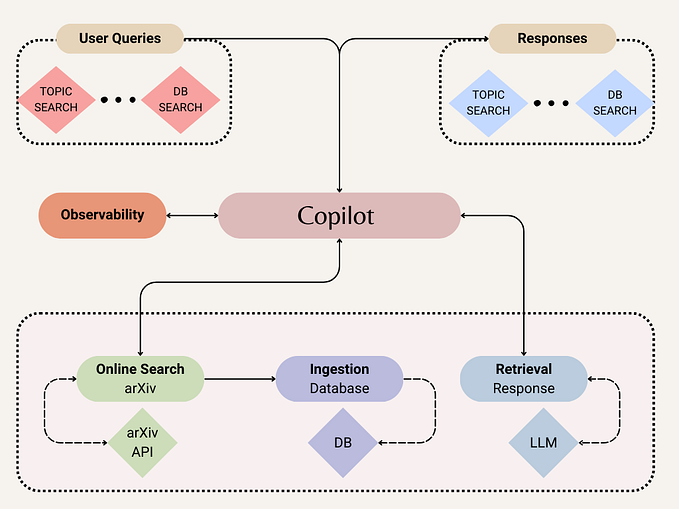

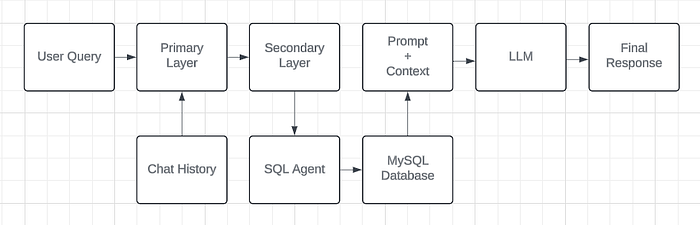

Architecture

The architecture here is an overview of the workflow. The primary layer itself will use the chat history with the basic Chain to generate a new and improved query which is then passed to the secondary layer. Here we use our SQL Agent that will directly run queries on your MySQL database and get the required data. Finally, this retrieved context is passed onto the LLM along with the prompt and voila! we have a working SQL Agent that can look into your chat history.

The function of the primary layer to allow us to use our chat history as our context so that when the data is passed to the LLM, it will have background knowledge relevant to our query. We also ask in our primary prompt to improve the query overall so that it is easier to pick for the SQL Agent.

self.chat_llm = ChatOpenAI(

openai_api_key=settings.get("langchain.openai_api_key"),

model= llm_model_name,

temperature=0.2,

verbose=True,

model_kwargs={"response_format": {"type": "json_object"}},

)

self.memory = ConversationBufferMemory(

memory_key = "chat_history",

input_key = "question",

return_messages = True

)

template = """

You are a query improvement bot that will use the chat_history provided to improved the user's query.

Return your response in the following JSON format:

{{

"question": "Your response"

}}

If the user's query lacks context, you will use the chat_history to build it.

If the chat_history is not relevant to the question mentioned, forward the same question forward

Question: {question}

Chat History: {chat_history}

Context: {context}

"""

self.prompt = PromptTemplate(

template = template,

input_variables = ["question", "chat_history", "context"]

) In the code block above we are initiating our LLM using the ChatOpenAI function from LangChain. After that we instantiate our memory using the ConversationBufferMemory. Finally, we set up out prompt using the PromptTemplate and the prompt is very specific to our use case.

def get_improved_query(

self,

query,

chat_history: list[SessionMessageBase]):

self.chain = load_qa_chain(

llm = self.chat_llm,

chain_type = "stuff",

memory = self.memory,

prompt = self.prompt,

verbose = True

)

self.memory.clear()

print("chat_history", chat_history)

for message in chat_history:

self.memory.chat_memory.add_user_message(message.query)

self.memory.chat_memory.add_ai_message(str(message.response))

response = self.chain.run(

input_documents=[],

question=query

)

print("response", response)

return response In this next snippet of code we are creating our QA Chain using the variables defined in the previous code block. We’re adding our messages to the chat history and then we are running the chain to get the improved query. Note that the input_documents have been left empty on purpose.

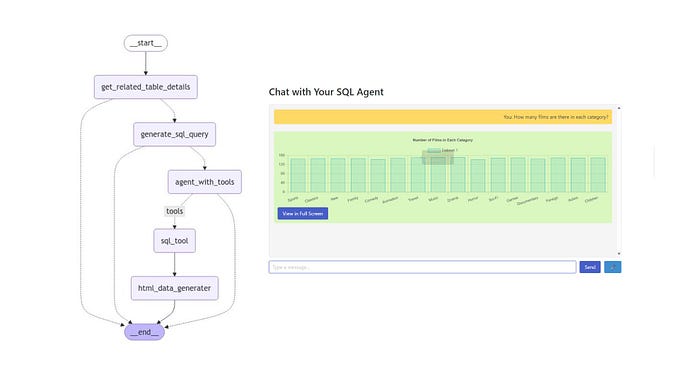

Secondary Layer: SQL Agent

The secondary layer is where the magic happens. The SQL Agent from LangChain is pretty amazing. On the surface, you’ll never understand how it works but there’s a lot going on behind the scenes. So the SQL Agent starts off by taking your question and then it asks the LLM to create an SQL query based on your question. The query is then ran on your MySQL database using a built-in function. The response data from the database is then sent to the LLM again along with the original question and then the final chat response is delivered from the LLM. I cannot stress enough on how awesome this is. This is a new type of RAG retriever that can easily get attached to your database, allowing easy and seamless connection between your data and the chatbot.

self.db = SQLDatabase.from_uri(database_url)

self.chat_llm = ChatOpenAI(

openai_api_key="OpenAI-Key",

model= llm_model_name,

temperature=0,

verbose=True,

model_kwargs={"response_format": {"type": "json_object"}},

) Here we are initiating multiple variables. We start with the database using the SQLDatabase.from_uri() which takes in the database URL. Next we instantiate the LLM just like before but this time we add in a special parameter called model_kwargs and we set the response format to JSON so it is easier for us to get the data and parse it.

self.system = """You are an agent designed to interact with a SQL database.

Given an input question, create a syntactically correct MySQL query to run, then look at the results of the query and return the answer.

Unless the user specifies a specific number of examples they wish to obtain, always limit your query to at most 3 results.

You can order the results by a relevant column to return the most interesting examples in the database.

Never query for all the columns from a specific table, only ask for the relevant columns given the question.

You have access to tools for interacting with the database.

Only use the given tools. Only use the information returned by the tools to construct your final answer.

Only use the results of the given SQL query to generate your final answer and return that.

You MUST double check your query before executing it. If you get an error while executing a query then you should stop!

DO NOT make any DML statements (INSERT, UPDATE, DELETE, DROP etc.) to the database.

Only create an SQL statement ONCE!"""

self.prompt = ChatPromptTemplate.from_messages(

[("system", self.system), ("human", "{input}"), MessagesPlaceholder(variable_name="agent_scratchpad")]

) The prompt for the SQL Agent is a bit different. Here we’re using the ChatPromptTemplate and if you really look into it, you’ll see how it is specifically written to create and run SQL queries.

def create_sql_agent(self):

return create_sql_agent(

llm=self.chat_llm,

db=self.db,

prompt=self.prompt,

agent_type="openai-tools",

verbose=True,

)This is the function that let’s you create SQL Agents. It takes in an LLM, a database, a prompt and the agent_type specifically has to be “openai-tools”.

improved_query = json.loads(BaseAgent.get_improved_query(query, chat_history))

response = self.agent.invoke({"input": improved_query.get("question")})This is the final part of the code that will first run the primary layer to get the improved query and then we use that improved query to get our final response.

Takeaways

Key takeaways from this solution is that it is a DIY mechanism that I built according to my use case. I’m sure sooner or later the LangChain community should find an obvious solution for this where they can cater for the chat history with the SQL Agent. For now, this is something that can be really helpful for you if you’re looking to incorporating chat history with your own chatbot that can query directly into your database.

Happy coding!